Reports

apiUi offers several reports that speed up testing activities:

- Coverage report

- Regression report

- Test summary report

- Schema compliance report

Coverage report

Test coverage indicates the degree to which the available elements of the APIs are tested.

The higher the percentage of elements tested, the lower the chance that a bug goes undetected. This report helps you determine whether your test is complete.

Based on the available operations, apiUi creates a kind of super data-type. It then scans the log lines in the messages tab of the log panel and counts which elements are used in these messages. In that super data-type, enumeration values are treated just like sub-elements.
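To make the counting idea concrete, here is a minimal conceptual sketch. It is not apiUi's implementation: the `Node` class, the `coverage` function and the dotted-path format of `seen` are hypothetical, and the sketch assumes coverage is counted over leaf items only, with enumeration values modelled as leaf children.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A hypothetical element of the 'super data-type' tree.

    Enumeration values are modelled as ordinary child nodes, as described above.
    """
    name: str
    children: list["Node"] = field(default_factory=list)


def coverage(node: Node, seen: set, prefix: str = "") -> tuple:
    """Return (covered, total), counted over the leaves below `node`.

    `seen` holds the dotted paths found while scanning the logged messages.
    """
    path = f"{prefix}.{node.name}" if prefix else node.name
    if not node.children:
        return (1 if path in seen else 0), 1
    covered = total = 0
    for child in node.children:
        child_covered, child_total = coverage(child, seen, path)
        covered += child_covered
        total += child_total
    return covered, total


# Example: only response code 200 and contactType 'customer' were seen in the log.
tree = Node("createContact", [
    Node("responseCode", [Node("200"), Node("400"), Node("500")]),
    Node("contactType", [Node("customer"), Node("prospect")]),
])
seen = {"createContact.responseCode.200", "createContact.contactType.customer"}
covered, total = coverage(tree, seen)
print(f"{covered}/{total} covered ({100 * covered / total:.0f}%)")  # 2/5 covered (40%)
```

Counting only leaves keeps the percentage independent of how deeply the tree is nested; apiUi may count intermediate elements differently.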

To create this report choose Log->Coverage report from the menu.

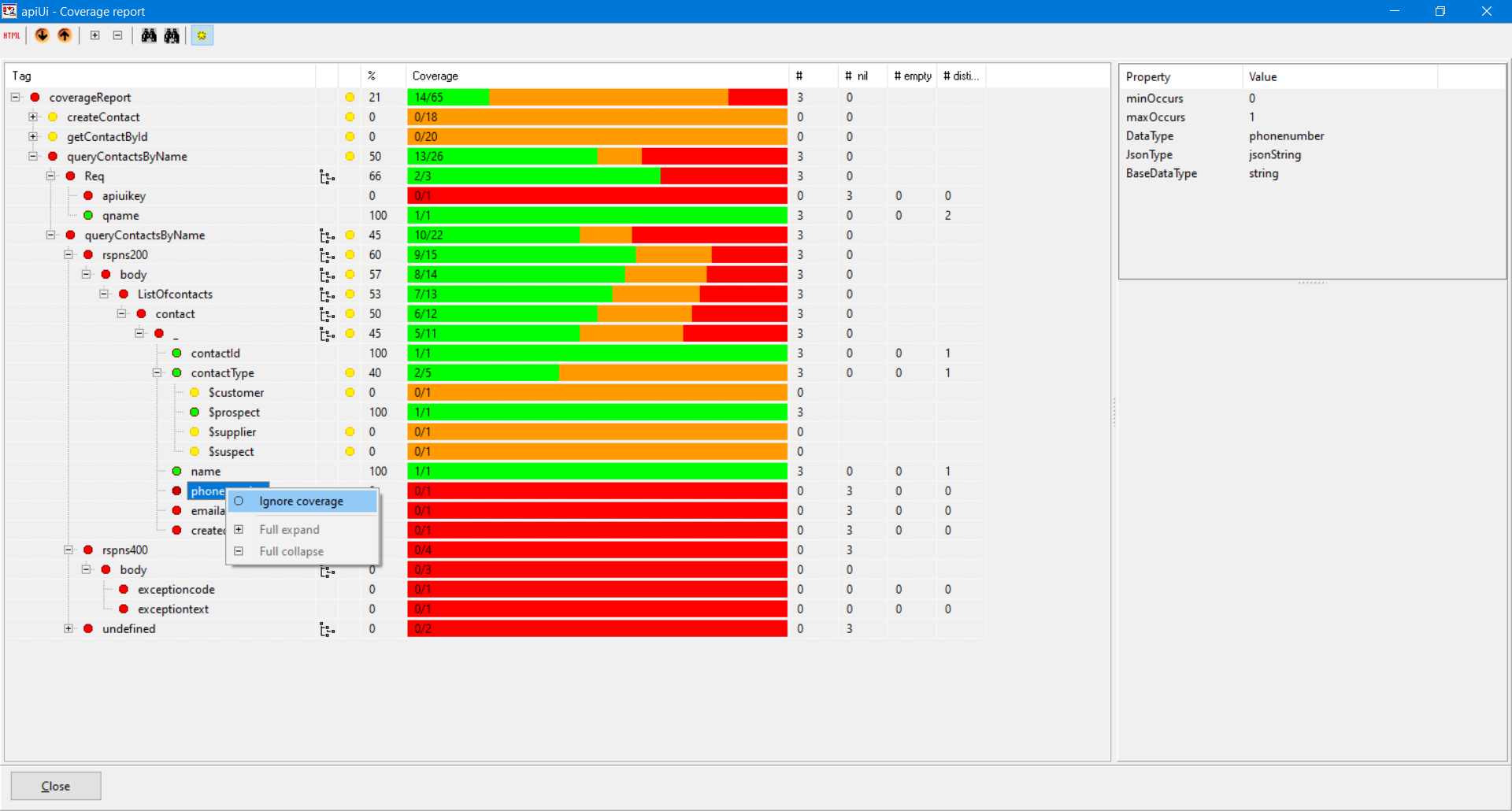

The apiUi Coverage Report. It is easy to see that, so far, only response code 200 has been tested.

The report columns

Tag

Shows the structure of the super data-type.

Ignorance

Indicates that the item itself, or at least one item somewhere below it, is ignored.

Percentage

The percentage of the covered items.

Coverage

This colored column shows the coverage of that element.

Green represents covered.

Orange represents ignored.

Red represents not covered.

Visibility of orange depends on the toggle state of the Show ignored elements tool button.

The text in this column shows the number of covered items versus the total number of (sub)items.

Count

The number of times the item was found in the log.

Count nil

The number of times an item was not found while its parent was found.

Count empty

The number of times the item was found but contained no value.

Count distinct

The number of distinct values found for the item.
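The four count columns can be illustrated with a small sketch over messages modelled as nested Python dictionaries. The function name `element_counts` and the message layout are hypothetical, and the sketch assumes that empty values are not counted as distinct values; apiUi's exact rules may differ.

```python
from typing import Any


def element_counts(messages: list, parent: str, child: str) -> dict:
    """Compute Count, Count nil, Count empty and Count distinct for parent.child."""
    count = count_nil = count_empty = 0
    distinct: set = set()
    for msg in messages:
        if parent not in msg:
            continue  # parent absent: the child is neither counted nor 'nil'
        node: Any = msg[parent]
        if child not in node:
            count_nil += 1  # parent found, child missing
            continue
        count += 1
        value = node[child]
        if value in (None, ""):
            count_empty += 1  # found, but without a value
        else:
            distinct.add(str(value))  # ASSUMPTION: empty values are not 'distinct'
    return {"count": count, "count_nil": count_nil,
            "count_empty": count_empty, "count_distinct": len(distinct)}


messages = [
    {"contact": {"contactType": "customer"}},
    {"contact": {"contactType": "prospect"}},
    {"contact": {"contactType": "customer"}},
    {"contact": {"contactType": ""}},
    {"contact": {}},
    {"other": {}},
]
print(element_counts(messages, "contact", "contactType"))
# {'count': 4, 'count_nil': 1, 'count_empty': 1, 'count_distinct': 2}
```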

Tool buttons

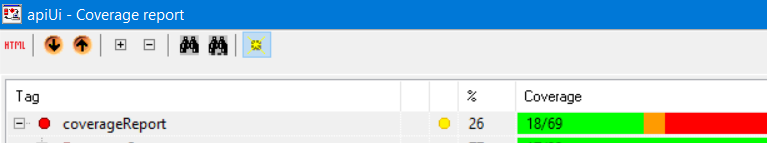

The apiUi Coverage Report tool buttons

1 HTML

Open HTML version of report in the default browser.

2 Down arrow

Jump to the next uncovered element.

3 Up arrow

Jump to the previous uncovered element.

4 Full expand

Fully expand the root element.

5 Full collapse

Fully collapse the root element.

6 Find

Find name or value.

7 Find next

Repeat the last find.

8 Show ignored elements

In most cases you will not want to test every available element or value, so it is possible to ignore certain parts. Use this button to toggle the visibility of ignored elements and values; toggling it changes the numbers and percentages shown.

Context menu

Ignore coverage

Choose this context menu item for parts that you do not want to test because they are not relevant.

The orange color in the Coverage column represents the share of ignored items. To undo ignoring an item, use the context menu item Take coverage into account, which then becomes available. Note that changing which items are ignored changes your project design.

Full expand

Fully expand the selected item.

Full collapse

Fully collapse the selected item.

Regression report

Most test tools support regression testing by letting test engineers enter expected values. This is a lot of work, and most regression errors found this way are probably caused by data-entry mistakes rather than real bugs. apiUi uses a different approach: it uses snapshots to test whether a certain scenario passes or fails. After the very first run of a scenario, all log lines are stored in a snapshot, which the test engineer checks manually. Each error found is reported according to the team's way of working, and this first snapshot is promoted to reference. After the bug-fix release has been deployed, the same scenario is run again and saved to the same snapshot, which is now automatically compared with the reference snapshot. Ideally, apiUi's regression report now shows only the expected differences caused by the corrected errors. If necessary, repeat these steps. Fortunately, all of these steps can be automated in apiUi.

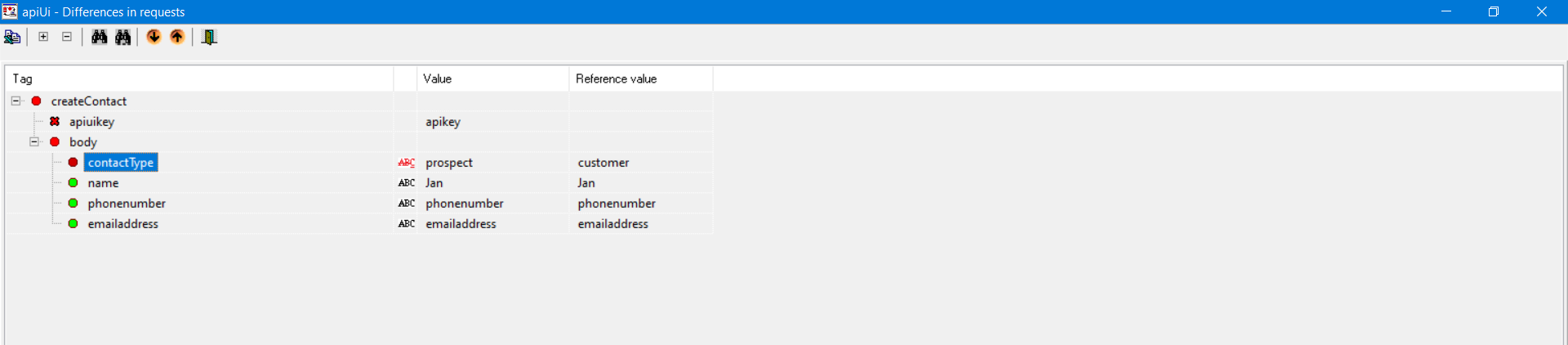

Suppose that, after running a test with some createContact calls, the tester reports the following issues:

- apiuikey in the request-header is always missing

- contactType is filled with 'customer' instead of 'prospect'

The developer deploys a bug fix, the test is run again, and apiUi compares the new snapshot with the reference snapshot.

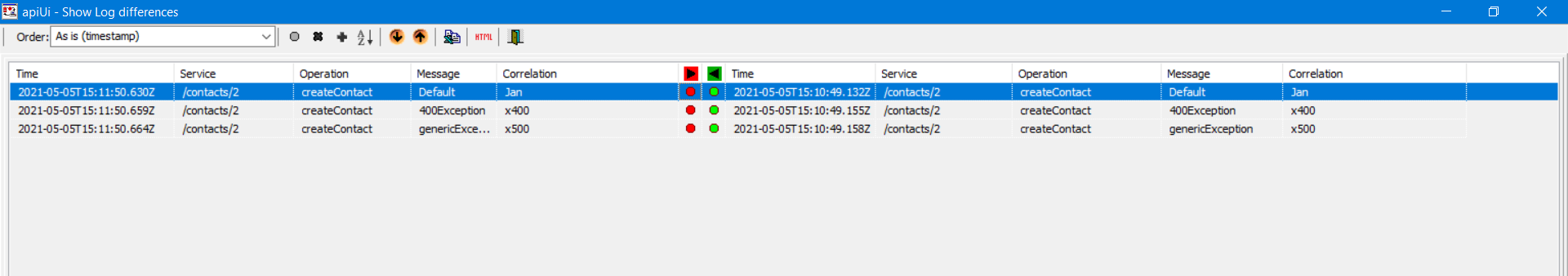

The regression overview as shown by the apiUi GUI (see Regression Overview).

The left half of the screen shows data from the current test run, and the right half shows data from the reference run. In the middle there are two columns with bullets: the left one for requests, the right one for responses.

A green bullet means that no differences were found; red means that at least one difference was found. To see the exact differences, click such a bullet and a details report pops up.
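The following sketch shows the idea behind the overview: pair the entries of both runs and mark each request and response green or red. It assumes entries are paired by position (after any sorting), and the `LogEntry` class and `overview` function are hypothetical simplifications, not apiUi's code. The example mirrors the createContact scenario above.

```python
from dataclasses import dataclass
from itertools import zip_longest


@dataclass
class LogEntry:
    """Hypothetical, simplified log line: operation name plus parsed messages."""
    operation: str
    request: dict
    response: dict


def bullet(current: dict, reference: dict) -> str:
    return "green" if current == reference else "red"


def overview(current: list, reference: list) -> list:
    """Pair entries by position; return (operation, request bullet, response bullet)."""
    rows = []
    for cur, ref in zip_longest(current, reference):
        if cur is None or ref is None:
            # The entry exists in only one of the runs: always a difference.
            rows.append(((cur or ref).operation, "red", "red"))
        else:
            rows.append((cur.operation,
                         bullet(cur.request, ref.request),
                         bullet(cur.response, ref.response)))
    return rows


reference = [LogEntry("createContact", {"contactType": "customer"}, {"code": 200})]
current = [LogEntry("createContact", {"apiuikey": "k1", "contactType": "prospect"}, {"code": 200})]
print(overview(current, reference))  # [('createContact', 'red', 'green')]
```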

Regression details as shown by the apiUi GUI (see Regression Details).

This detail screen shows how you would need to change the current message to become the same as the reference message.

In this case the report shows only the intended differences, so the bug was fixed successfully. This also means that the new snapshot is a good candidate to act as the reference snapshot for the next release of the system-under-test.
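Producing the list of changes that turn the current message into the reference message can be sketched by flattening both messages into dotted element paths and comparing them. This is a simplified illustration with hypothetical names (`flatten`, `details`) and a hypothetical message layout, not apiUi's actual algorithm; repeating groups, for instance, are not handled.

```python
def flatten(message: dict, prefix: str = "") -> dict:
    """Turn nested dicts into {'dotted.path': value} pairs (leaf values only)."""
    flat = {}
    for key, value in message.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


def details(current: dict, reference: dict) -> list:
    """List the edits that would turn `current` into `reference`."""
    cur, ref = flatten(current), flatten(reference)
    edits = []
    for path in sorted(cur.keys() | ref.keys()):
        if path not in ref:
            edits.append(f"remove {path}")
        elif path not in cur:
            edits.append(f"add {path}={ref[path]!r}")
        elif cur[path] != ref[path]:
            edits.append(f"change {path}: {cur[path]!r} -> {ref[path]!r}")
    return edits


# After the bug fix (current) versus the first run (reference).
current = {"header": {"apiuikey": "k1"}, "body": {"contactType": "prospect"}}
reference = {"header": {}, "body": {"contactType": "customer"}}
for edit in details(current, reference):
    print(edit)
# change body.contactType: 'prospect' -> 'customer'
# remove header.apiuikey
```

Both reported edits correspond to the two intended fixes, which is what makes this snapshot a reference candidate.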

In practice, requests and responses will often differ on every run because of timestamps and generated keys. For that reason, the first comparison with the reference will usually show differences that the regression report should be told to ignore. How to ignore certain changes is described in How to ignore certain differences.

Note that changing which items are ignored changes your project design.

For CI/CD pipelines, an API (/apiUi/api/snapshot/checkregression) is available that reports ok/nok.
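A pipeline step could call this endpoint and fail the job on nok. The sketch below is hedged: it assumes a plain HTTP GET without authentication and a plain-text `ok`/`nok` body, neither of which is specified here, and the function names are hypothetical. Check the response format of your apiUi version before relying on it.

```python
import urllib.request

CHECK_REGRESSION_PATH = "/apiUi/api/snapshot/checkregression"  # endpoint named in the text


def build_url(server: str) -> str:
    """Join a server address such as 'http://localhost:7777' with the endpoint path."""
    return server.rstrip("/") + CHECK_REGRESSION_PATH


def regression_passed(body: str) -> bool:
    """ASSUMPTION: the body is plain text 'ok' or 'nok'; anything else counts as a failure."""
    return body.strip().lower() == "ok"


def check_regression(server: str) -> int:
    """Return a process exit code for a CI step: 0 when the check reports ok, 1 otherwise."""
    # ASSUMPTION: a plain HTTP GET without authentication.
    with urllib.request.urlopen(build_url(server), timeout=60) as resp:
        body = resp.read().decode("utf-8", errors="replace")
    return 0 if regression_passed(body) else 1
```

In a pipeline script, `sys.exit(check_regression("http://localhost:7777"))` would make the step fail whenever the check does not report ok; treating any unexpected body as a failure keeps the gate on the safe side.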

Regression Overview

The apiUi Regression Report tool buttons

- Order

- Ignored differences

- Ignored additions

- Ignored removals

- Additional sort elements

- Down arrow

- Up arrow

- Copy (Spreadsheet format)

- HTML

- Exit

1 Order

In some situations, e.g. when the system-under-test does parallel processing, the order of calls may differ from run to run. You can specify a sort order so that apiUi can match log entries from the current run with those from the reference run. A disadvantage of sorting is that an unwanted change in the order of service calls will not be noticed.

- As is (timestamp)

- Service, Operation

- Service, Operation, Correlation
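The effect of these sort orders can be sketched with Python's stable sorting. The `Entry` class is a hypothetical simplification, and its `correlation` field is a stand-in for whatever correlation value apiUi derives for an operation; this illustrates the matching idea, not apiUi's code.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    """Hypothetical, simplified log entry."""
    timestamp: str
    service: str
    operation: str
    correlation: str = ""  # hypothetical stand-in for a correlation value


SORT_KEYS = {
    "As is (timestamp)": lambda e: (e.timestamp,),
    "Service, Operation": lambda e: (e.service, e.operation),
    "Service, Operation, Correlation": lambda e: (e.service, e.operation, e.correlation),
}


def ordered(entries: list, mode: str) -> list:
    # sorted() is stable: entries with equal keys keep their original log order.
    return sorted(entries, key=SORT_KEYS[mode])


def signature(entries: list, mode: str) -> list:
    """The sort keys in sorted order; equal signatures mean the runs can be paired."""
    return [SORT_KEYS[mode](e) for e in ordered(entries, mode)]


current = [Entry("10:00:01", "crm", "createContact", "C1"),
           Entry("10:00:02", "billing", "createInvoice", "C1"),
           Entry("10:00:03", "crm", "createContact", "C2")]
reference = [Entry("09:00:01", "crm", "createContact", "C2"),
             Entry("09:00:02", "crm", "createContact", "C1"),
             Entry("09:00:03", "billing", "createInvoice", "C1")]

mode = "Service, Operation, Correlation"
print(signature(current, mode) == signature(reference, mode))  # True
```

With Service, Operation alone, the two createContact calls would still be paired in log order, so C1 in the current run would be compared with C2 in the reference; adding the correlation value avoids that mismatch.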

2 Ignored differences

Opens a form where you can maintain the ignored differences

3 Ignored additions

Opens a form where you can maintain the ignored additions

4 Ignored removals

Opens a form where you can maintain the ignored removals

5 Additional sort elements

Opens a form to maintain the extra sort elements

6 Down arrow

Jump to the next row with a difference

7 Up arrow

Jump to the previous row with a difference

8 Copy (Spreadsheet format)

Copies this overview so it can be pasted in a spreadsheet

9 HTML

Shows an HTML report in the default browser

10 Exit

Leave this screen

Regression Details

The apiUi Regression Details tool buttons

- Copy data (Spreadsheet format)

- Full expand

- Full collapse

- Find

- Find next

- Jump to next difference

- Jump to previous difference

- Exit

1 Copy data (Spreadsheet format)

Copies data to the clipboard so it can be pasted in a spreadsheet.

2 Full expand

Expand the whole structure.

3 Full collapse

Collapse the whole structure.

4 Find

Find an element by name or value.

5 Find next

Repeat the last find.

6 Jump to next difference

Jump to next element that differs.

7 Jump to previous difference

Jump to previous element that differs.

8 Exit

Leave screen.

How to ignore certain differences

To ignore certain changes, use the context menu. Note that changing which items are ignored changes your project design.

Ignore differences on: unqualified name (starts with *.)

Ignore differences for all elements with the unqualified name. Applicable mostly for timestamps and generated keys.

Ignore differences on: qualified name

A safer way is to ignore differences for this specific element only. Applicable mostly for timestamps and generated keys.
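The difference between the unqualified and qualified variants can be sketched as two matching rules over dotted element paths. The path format and the function names are hypothetical; the sketch assumes that `*.name` matches any element named `name` at any depth, and that a qualified name matches exactly one element.

```python
def rule_matches(rule: str, path: str) -> bool:
    """ASSUMED semantics: '*.name' matches every element called 'name';
    any other rule matches exactly one qualified (dotted) path."""
    if rule.startswith("*."):
        name = rule[2:]
        return path == name or path.endswith("." + name)
    return path == rule


def remaining_differences(diff_paths: list, ignore_rules: list) -> list:
    """Drop every difference that is covered by at least one ignore rule."""
    return [p for p in diff_paths if not any(rule_matches(r, p) for r in ignore_rules)]


diffs = [
    "createContact.req.header.timestamp",
    "createContact.rsp.body.timestamp",
    "createContact.rsp.body.contactId",
    "createContact.req.body.contactType",
]
rules = ["*.timestamp", "createContact.rsp.body.contactId"]
print(remaining_differences(diffs, rules))  # ['createContact.req.body.contactType']
```

The unqualified rule silences both timestamps at once, which is convenient but also hides a timestamp that should not have changed; that is why the qualified variant is the safer choice.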

Check regular expression on: qualified name

Instead of checking for differences you can check if the value matches a regular expression.
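A regular-expression check might look like the following sketch. Whether apiUi requires the whole value to match or only part of it is not stated here; this sketch assumes a full match (`re.fullmatch`), and the timestamp pattern is only an example.

```python
import re

# Example pattern for an ISO-8601 UTC timestamp such as 2024-05-01T10:15:30Z.
TIMESTAMP = r"\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}Z"


def value_ok(value: str, pattern: str) -> bool:
    # ASSUMPTION: the whole value must match, hence re.fullmatch rather than re.search.
    return re.fullmatch(pattern, value) is not None


print(value_ok("2024-05-01T10:15:30Z", TIMESTAMP))  # True
print(value_ok("01-05-2024 10:15", TIMESTAMP))      # False
```

Unlike ignoring the element, this still catches a value with the wrong format, while tolerating that the actual timestamp differs per run.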

Check value partial on: qualified name

Another alternative for fields that always differ is to compare only part of the field's value.
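How apiUi selects the part to compare is not specified here. As a hypothetical illustration, the sketch below compares a fixed character range of both values, which suits generated keys with a stable prefix; apiUi's partial check may be configured differently.

```python
from typing import Optional


def partial_equal(current: str, reference: str, start: int, end: Optional[int] = None) -> bool:
    """Compare only current[start:end] with reference[start:end] (hypothetical rule)."""
    return current[start:end] == reference[start:end]


# Generated keys share a fixed prefix; only the running number differs per run.
print(partial_equal("CNT-2024-000123", "CNT-2024-000987", 0, 8))  # True
print(partial_equal("INV-2024-000123", "CNT-2024-000987", 0, 8))  # False
```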

Ignore adding of: unqualified name (starts with *.)

Use this when another system adds an optional field that has no impact on the system-under-test.

Ignore adding of: qualified name

Use this when another system adds an optional field that has no impact on the system-under-test.

Ignore removing of: unqualified name (starts with *.)

Use this when another system stops sending an optional field that has no impact on the system-under-test.

Ignore removing of: qualified name

Use this when another system stops sending an optional field that has no impact on the system-under-test.
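The four add/remove variants can be sketched as set differences over element paths. The sketch assumes that an addition is an element present in the current run but not in the reference, and a removal is the reverse; the rule syntax (`*.name` versus a dotted qualified path) and the function names are hypothetical.

```python
def matches(rule: str, path: str) -> bool:
    # ASSUMED rule syntax: '*.name' is unqualified, anything else is a qualified path.
    if rule.startswith("*."):
        return path == rule[2:] or path.endswith("." + rule[2:])
    return path == rule


def unexplained_changes(current: set, reference: set, ignore_add: list, ignore_remove: list) -> dict:
    """Additions are paths only in the current run; removals are paths only in the reference."""
    added = sorted(current - reference)
    removed = sorted(reference - current)
    return {
        "added": [p for p in added if not any(matches(r, p) for r in ignore_add)],
        "removed": [p for p in removed if not any(matches(r, p) for r in ignore_remove)],
    }


current = {"rsp.body.contactId", "rsp.body.loyaltyLevel", "rsp.body.segment"}
reference = {"rsp.body.contactId", "rsp.body.fax"}
print(unexplained_changes(current, reference, ["*.loyaltyLevel"], ["rsp.body.fax"]))
# {'added': ['rsp.body.segment'], 'removed': []}
```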

Ignore all in: unqualified name (starts with *.)

Ignore all differences in elements with the unqualified name and its sub-elements.

Ignore all in: qualified name

A safer way is to ignore all differences in a specific element and its sub-elements.
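Ignore all in covers an element together with everything below it. A minimal sketch, assuming elements are identified by dotted paths and that a rule starting with `*.` is unqualified; the function name is hypothetical.

```python
def ignored_by_ignore_all(rule: str, path: str) -> bool:
    """ASSUMED semantics of 'Ignore all in'.

    '*.name' ignores every element called 'name' plus everything below it;
    a qualified rule ignores that one element plus everything below it.
    """
    if rule.startswith("*."):
        return rule[2:] in path.split(".")
    return path == rule or path.startswith(rule + ".")


print(ignored_by_ignore_all("*.address", "req.body.address.street"))      # True
print(ignored_by_ignore_all("*.address", "req.body.addressType"))         # False
print(ignored_by_ignore_all("req.body.address", "req.body.address.zip"))  # True
print(ignored_by_ignore_all("req.body.address", "req.body.addressType"))  # False
```

Matching on whole path segments, rather than plain string prefixes, keeps a sibling such as addressType from being ignored by accident.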

Add qualified name to list of additional sort elements

Relevant when your system-under-test is multi-threaded and you cannot predict the order of its calls to the virtualization solution.

See also OrderOfMain.

Ignore order for repeating sub elements for: qualified name

Relevant when the system-under-test produces requests with unpredictable order within repeating groups.
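Ignoring order within a repeating group amounts to comparing the group as a multiset: every item must still occur as often as in the reference, but its position does not matter. The sketch below is an illustration with hypothetical names, assuming JSON-like items; it is not apiUi's implementation.

```python
import json
from collections import Counter


def same_ignoring_order(current: list, reference: list) -> bool:
    """Compare two repeating groups as multisets: order is ignored, duplicates still count."""
    def canonical(item) -> str:
        # json.dumps with sort_keys gives one stable text form per JSON-like item.
        return json.dumps(item, sort_keys=True)
    return Counter(map(canonical, current)) == Counter(map(canonical, reference))


lines_now = [{"sku": "B", "qty": 2}, {"sku": "A", "qty": 1}]
lines_ref = [{"qty": 1, "sku": "A"}, {"sku": "B", "qty": 2}]
print(same_ignoring_order(lines_now, lines_ref))                 # True
print(same_ignoring_order(lines_now, [{"sku": "A", "qty": 1}]))  # False
```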

Test summary report

The test summary is an HTML report that contains three parts:

- Regression report: a pass/fail report summarizing the regression report.

- Coverage report: a summary of the coverage report.

- Report adjustments: shows which differences are ignored by the report.

Read more on how to ignore differences.

Read more on how to ignore some coverage.

Reporting is done over the currently available snapshots.

Create report from apiUi

In the snapshots tab, press the corresponding button.

Create report from a browser

Visit apiUiServerAddress:portnumber/apiUi/api/testsummaryreport.

e.g. http://localhost:7777/apiUi/api/testsummaryreport
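Because the report is served at a plain URL, it can also be fetched from a script, for example to archive it as a CI artifact. The sketch below uses only the Python standard library; the function names are hypothetical, and it assumes the endpoint needs no authentication.

```python
import urllib.request
from pathlib import Path

REPORT_PATH = "/apiUi/api/testsummaryreport"  # path given above


def report_url(server: str) -> str:
    """e.g. 'http://localhost:7777' -> 'http://localhost:7777/apiUi/api/testsummaryreport'."""
    return server.rstrip("/") + REPORT_PATH


def save_report(server: str, target: Path) -> Path:
    """Fetch the HTML report with a plain GET, as a browser does, and write it to `target`."""
    with urllib.request.urlopen(report_url(server), timeout=120) as resp:
        target.write_bytes(resp.read())
    return target
```

For example, `save_report("http://localhost:7777", Path("test-summary.html"))` stores the report next to the other build output.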

Schema compliance report

apiUi can check whether messages are valid according to their schema. See Schema validation for more information.

The most important place to see whether messages comply with the schema is the message logging.

For CI/CD pipelines, an API (/apiUi/api/snapshot/checkschemacompliancy) is available that reports ok/nok.
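A CI/CD gate could combine the schema compliance check with the regression check and fail when either reports nok. The sketch assumes a plain HTTP GET without authentication and a plain-text `ok`/`nok` response body, neither of which is specified here; the function names are hypothetical, and the `fetch` parameter exists only so the logic can be exercised without a running apiUi server.

```python
import urllib.request
from typing import Callable, Dict

# Endpoint paths as named in this document.
CHECKS = {
    "regression": "/apiUi/api/snapshot/checkregression",
    "schema compliance": "/apiUi/api/snapshot/checkschemacompliancy",
}


def http_get(url: str) -> str:
    # ASSUMPTION: a plain HTTP GET without authentication.
    with urllib.request.urlopen(url, timeout=60) as resp:
        return resp.read().decode("utf-8", errors="replace")


def run_checks(server: str, fetch: Callable[[str], str] = http_get) -> Dict[str, bool]:
    """Call each check and map its name to True when the body is 'ok'.

    ASSUMPTION: the body is plain text 'ok' or 'nok'; anything else counts as failed.
    """
    base = server.rstrip("/")
    return {name: fetch(base + path).strip().lower() == "ok" for name, path in CHECKS.items()}


def exit_code(results: Dict[str, bool]) -> int:
    """0 only when every check passed, so the pipeline step fails otherwise."""
    return 0 if all(results.values()) else 1
```

For example, `sys.exit(exit_code(run_checks("http://localhost:7777")))` at the end of a pipeline script fails the step unless both checks report ok.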

Reporting against WireMock

The reports described here are also available to WireMock users.

Create an apiUi project, introspect the relevant provider contracts as described in Getting Started, and set up a connection with your WireMock simulation solution as described here, choosing WireMock as the connection type.

Reading logs from WireMock can then be done in various ways:

- Pressing the Log messages from remote server button

- By executing the script LogsFromRemoteServer

- By calling the apiUi api FetchLogsFromRemoteServer from any testdriver.

Regression reporting works without first introspecting provider contracts; the other reports require formal descriptions such as OpenApi or Wsdl.